NIST (National Institute of Standards and Technology) has published an initial public draft of its Artificial Intelligence Risk Management Framework, as part of an effort to implement President Biden’s executive order on AI.

The draft publications cover a range of concerns from addressing the problem of malicious training data to promoting transparency in digital content. They are aimed at helping improve the safety, security, and trustworthiness of artificial intelligence (AI) systems and one of the drafts proposes a plan for developing global AI standards.

NIST Highlights AI-Augmented Hacking, Malware, and Phishing as Emerging Security Threats#

The document for “Mitigating the Risks of Generative AI” is the one most relevant for cybersecurity professionals. It’s clear the U.S. federal government has autonomous exploits on its radar, among other threats.

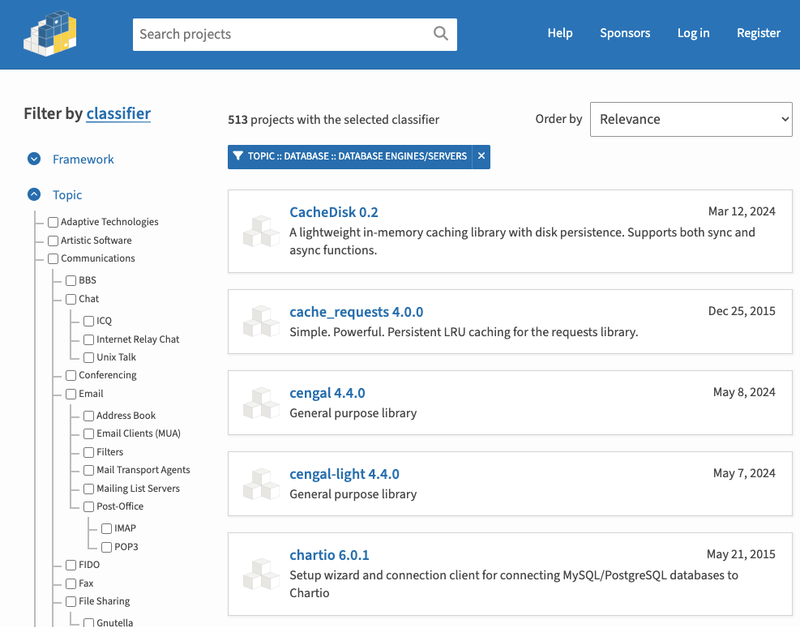

Outlined in the overview of risks unique to or exacerbated by GAI (Generative AI), NIST identified a host of concerns related to information security:

Lowered barriers for offensive cyber capabilities, including ease of security attacks, hacking, malware, phishing, and offensive cyber operations through accelerated automated discovery and exploitation of vulnerabilities; increased available attack surface for targeted cyber attacks, which may compromise the confidentiality and integrity of model weights, code, training data, and outputs.

The document highlights the potential augmentation of offensive cyber capabilities like hacking, malware, and phishing. It cites the recent publication from researchers claiming to have created a GPT-4 agent that can autonomously exploit both web and non-web vulnerabilities in real-world systems. Some regard these as emerging AI capabilities, but even if they aren't - autonomous exploits are undoubtedly coming in the near future.

Prompt-injection and data poisoning are identified as some of the most concerning GAI vulnerabilities, which could cause affected systems to precipitate “a variety of downstream consequences to interconnected systems.”

Security researchers have already demonstrated how indirect prompt injections can steal data and run code remotely on a machine. Merely querying a closed production model can elicit previously undisclosed information about that model. Information security for GAI models and systems also includes security, confidentiality, and integrity of the GAI training data, code, and model weights.

The document was created over the past year and includes feedback from NIST’s generative AI public working group of more than 2,500 members. It focuses on a list of 13 risks and identifies more than 400 actions developers can take to manage them. These actions include some best practices and recommendations for information security with respect to supply chains:

- Apply established security measures to: Assess risks of backdoors, compromised dependencies, data breaches, eavesdropping, man-in-the-middle attacks, reverse engineering other baseline security concerns;

- Audit supply chains to identify risks arising from, e.g., data poisoning and malware, software and hardware vulnerabilities, third-party personnel and software;

- Audit GAI systems, pipelines, plugins and other related artifacts for unauthorized access, malware, and other known vulnerabilities.

Many of the recommended actions pertain to securing generative AI systems:

- Establish policies to incorporate adversarial examples and other provenance attacks in AI model training processes to enhance resilience against attacks.

- Implement plans for GAI systems to undergo regular adversarial testing to identify vulnerabilities and potential manipulation risks.

- Conduct adversarial role-playing exercises, AI red-teaming, or chaos testing to identify anomalous or unforeseen failure modes.

- Conduct security assessments and audits to measure the integrity of training data, system software, and system outputs.

In their current form, the drafts published this week are aimed at helping organizations make decisions on how best to manage AI risk in a way that will coincide with what may become future regulatory requirements. The recommendations will likely contribute to shaping best practices for securing AI systems and software as the technology rapidly advances in capabilities.

The documents were published as drafts that are open to comment from the public via email or on the www.regulations.gov website. NIST is particularly interested in feedback on the glossary terms, risk list, and proposed actions for mitigation. Comments will close on June 2, 2024, ahead of the document’s publication in the Federal Register Notice.